A star tracker is a celestial reference device that recognizes star patterns, such as constellations, and estimates the orientation of a spacecraft using an on-board star camera. When the tracker is initialized, typically no information other than the captured image is used. This operation mode is commonly referred to as the Lost-In-Space (LIS) mode. In LIS mode, a first stage called star extraction finds the point-like star locations in the image plane. Then, in the star identification stage, the distribution of the detected points is compared to a star catalog in order to match the observed points to catalog stars. Finally, the attitude of the star tracker is computed from these correspondences between the known catalog star positions and the image-plane points.
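The text above does not say which method turns the matched stars into an attitude. As a hedged illustration only, here is the classical TRIAD method, which builds the attitude matrix from just two matched star directions. The function and argument names are hypothetical. With more than two matches, a least-squares solution of Wahba's problem (for example Davenport's q-method or QUEST) is the usual choice.

```python
import math


def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def _triad_frame(v1, v2):
    """Orthonormal triad (three unit vectors) built from two directions."""
    t1 = _normalize(v1)
    t2 = _normalize(_cross(v1, v2))
    t3 = _cross(t1, t2)
    return [t1, t2, t3]


def triad(b1, b2, r1, r2):
    """Attitude matrix A (3x3, nested lists) such that b = A r.

    b1, b2: directions to two identified stars in the camera (body) frame.
    r1, r2: directions to the same stars from the star catalog (inertial frame).
    The first pair is matched exactly; the second only fixes the roll about it.
    """
    tb = _triad_frame(b1, b2)
    tr = _triad_frame(r1, r2)
    # A = tb1 tr1^T + tb2 tr2^T + tb3 tr3^T
    return [[sum(tb[k][i] * tr[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]
```

For instance, `triad([0, 1, 0], [-1, 0, 0], [1, 0, 0], [0, 1, 0])` should return the 90° rotation about the z axis, since that rotation maps the catalog x axis onto the camera y axis. Because TRIAD trusts the first star exactly, the better-measured star is normally passed first.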

The literature offers many methods for star identification, based for example on inter-star angles, on the distribution pattern of the stars on the image plane, or on calibrated device magnitudes. In our implementation, we randomly group 4 stars and compute a geometric signature from their locations on the image plane. The extracted signature is invariant under scaling, rotation and permutation.

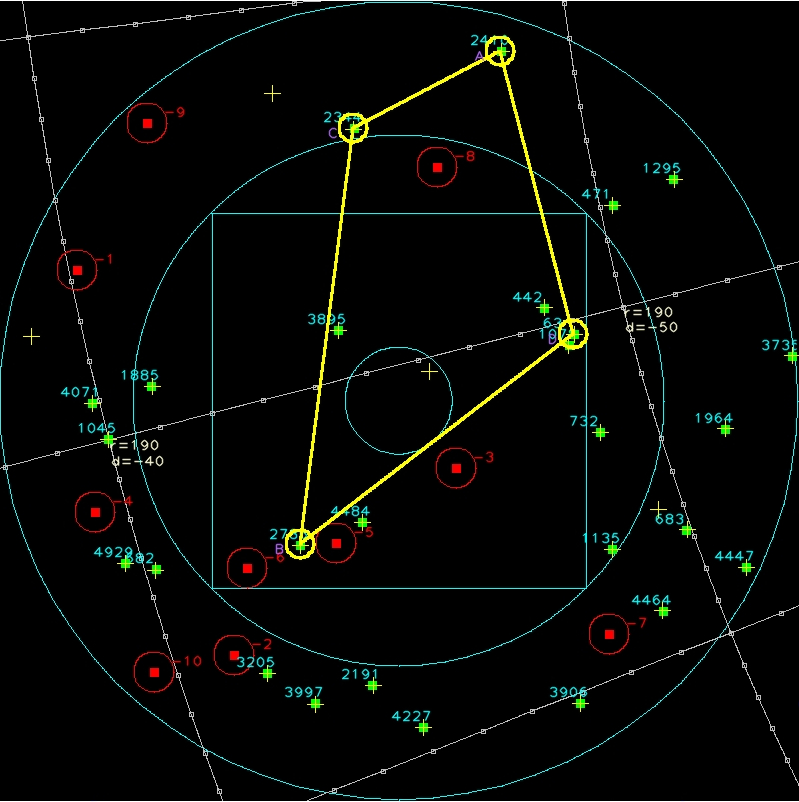

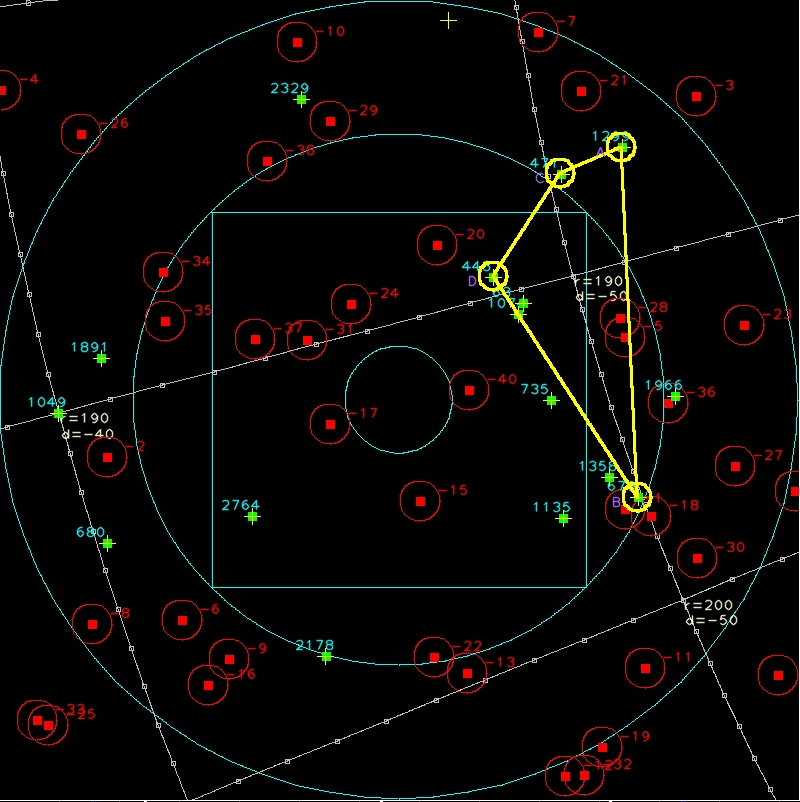

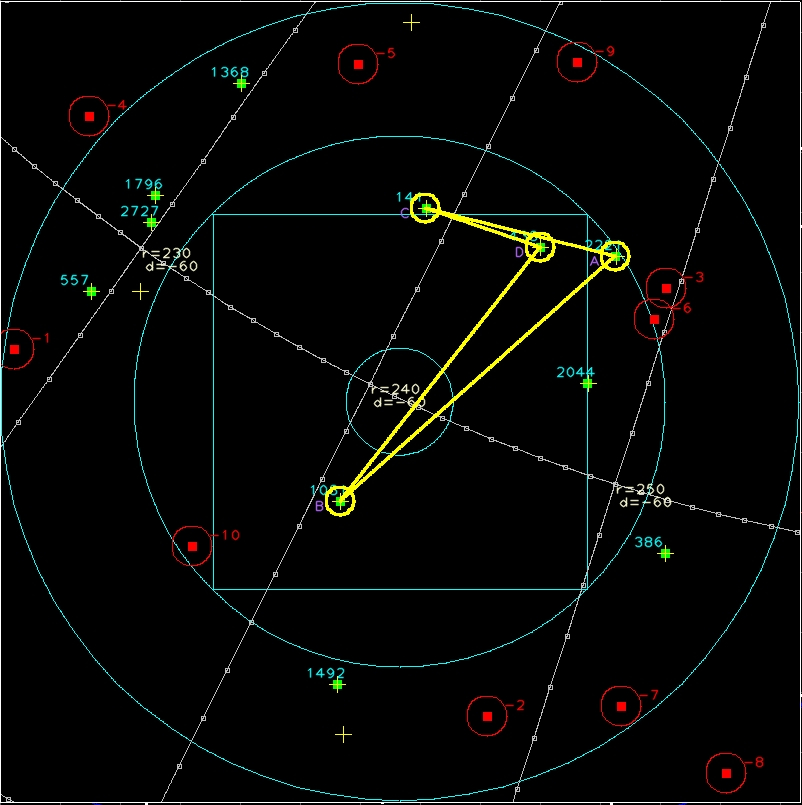

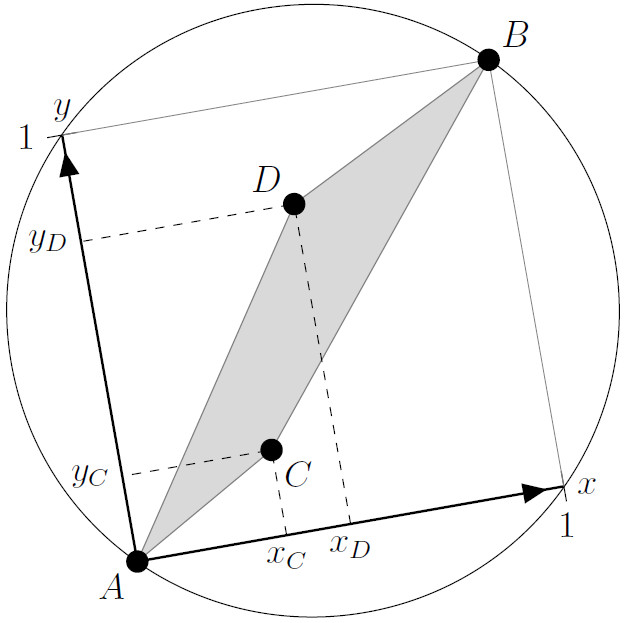

Fig. 1. (a) Geometric hash computation for stars A, B, C, D: the two stars farthest apart are selected to form the diameter of a circle, and a coordinate frame is defined so that A is at (0, 0) and B is at (1, 1). After applying the scaling and rotation transformations that place A and B at these positions, the coordinates of stars C and D, (C(x), C(y), D(x), D(y)), become the geometric hash code that describes the distribution of stars in this quad.
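The construction in Fig. 1 can be sketched in Python with complex numbers, where a single complex multiplication performs the combined rotation and scaling. This is a minimal sketch under stated assumptions: the caption does not say how the labels A/B and C/D are fixed, so for permutation invariance we borrow a tie-breaking convention of the kind used by astrometry.net (choose the A/B order giving C(x) + D(x) ≤ 1, then label as C the star with the smaller x). The name `quad_hash` is hypothetical.

```python
from itertools import combinations


def quad_hash(points):
    """Geometric hash (C(x), C(y), D(x), D(y)) of four 2-D star positions.

    points: four (x, y) image-plane coordinates, in any order.
    """
    z = [complex(x, y) for x, y in points]
    # A and B are the two stars that are farthest apart.
    i, j = max(combinations(range(4), 2),
               key=lambda pair: abs(z[pair[0]] - z[pair[1]]))
    rest = [m for m in range(4) if m not in (i, j)]
    a, b = z[i], z[j]

    def to_frame(p):
        # Similarity transform (translate, rotate, scale): a -> 0, b -> 1+1j.
        return (p - a) * (1 + 1j) / (b - a)

    c, d = to_frame(z[rest[0]]), to_frame(z[rest[1]])
    # Assumed tie-breaking: swapping A and B maps (x, y) to (1 - x, 1 - y),
    # so pick the labelling with C(x) + D(x) <= 1.
    if c.real + d.real > 1:
        c, d = (1 + 1j) - c, (1 + 1j) - d
    # Assumed tie-breaking: label as C the star with the smaller x.
    if c.real > d.real:
        c, d = d, c
    return (c.real, c.imag, d.real, d.imag)
```

Because every step is a similarity transform followed by a canonical relabelling, shifting, rotating, scaling or shuffling the four input points should leave the returned tuple unchanged up to floating-point error. Exact ties (for example C(x) + D(x) = 1) are not handled in this sketch.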


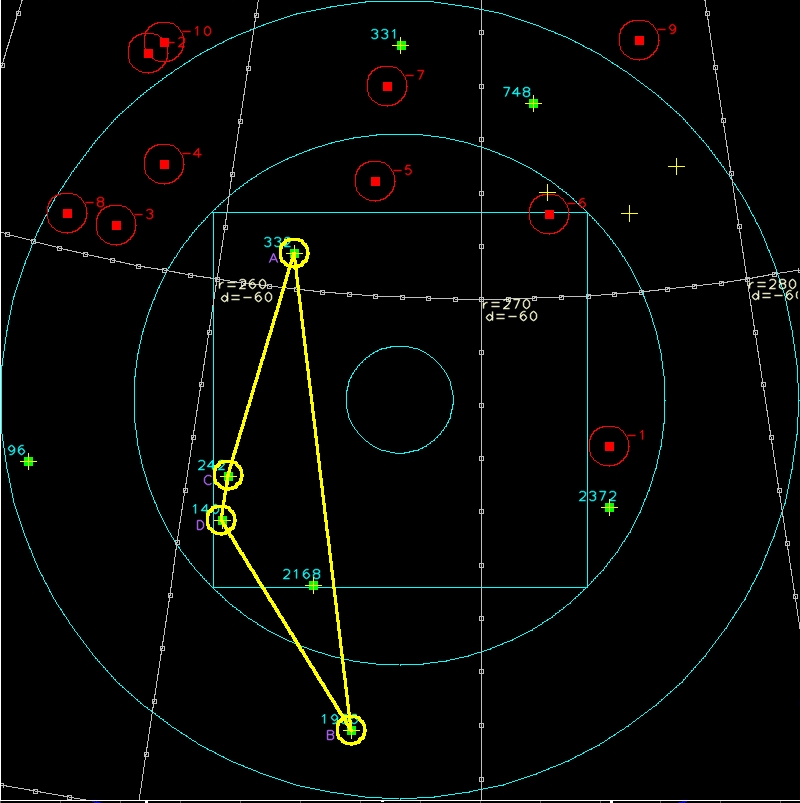

Using this signature and the known camera parameters (such as the field of view), we index the star catalog so that all possible viewing directions are covered. For a given orientation, we render what the star frame would look like using the catalog stars, group the stars visible in the viewport, and compute the geometric signature of each group. The result is an index database of grouped star IDs and their signatures. During a query, the locations of star-like regions are first extracted from the query image and then used to form quads, which are looked up in the index database.
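The text does not describe how the index database is stored. A minimal in-memory sketch, assuming the codes are bucketed on a uniform grid and that a query accepts any stored quad whose code lies within a tolerance `tol` in every coordinate, could look like this. The names `build_index`, `query`, `cell_size` and `tol`, and their default values, are hypothetical.

```python
from collections import defaultdict
from itertools import product


def _cell(code, cell_size):
    """Quantize a 4-D hash code to an integer grid cell."""
    return tuple(int(c // cell_size) for c in code)


def build_index(entries, cell_size=0.05):
    """Build a hypothetical in-memory index.

    entries: iterable of (star_ids, code) pairs, where star_ids is a 4-tuple of
    catalog ids and code is its (C(x), C(y), D(x), D(y)) signature.
    """
    index = defaultdict(list)
    for star_ids, code in entries:
        index[_cell(code, cell_size)].append((star_ids, code))
    return index


def query(index, code, tol=0.01, cell_size=0.05):
    """Return catalog quads whose stored code is within tol of code in
    every coordinate. Requires tol <= cell_size and the same cell_size
    that was used in build_index."""
    base = _cell(code, cell_size)
    hits = []
    # A match can sit just across a cell border, so scan the 3**4 cells
    # around (and including) the query's own cell.
    for offset in product((-1, 0, 1), repeat=4):
        key = tuple(b + o for b, o in zip(base, offset))
        for star_ids, stored in index.get(key, ()):
            if all(abs(s - c) <= tol for s, c in zip(stored, code)):
                hits.append(star_ids)
    return hits
```

Each hit is only a candidate correspondence. In practice, several quads would normally have to agree, or the resulting attitude would be checked against the other detected stars, before a match is accepted. A memory-constrained flight implementation would presumably use a more compact structure than a Python dictionary.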

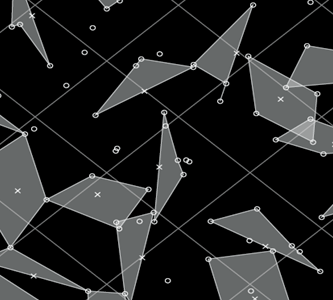

Fig. 2. A possible grouping of a star-frame viewport
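Fig. 2 shows one possible grouping. As a hedged sketch of the random 4-star grouping mentioned earlier, the function below draws distinct quads from the stars of one rendered viewport. The optional `max_diameter` limit on quad size is an assumption added for illustration, not something the text specifies, and all names are hypothetical.

```python
import math
import random
from itertools import combinations


def random_quads(stars, n_quads, max_diameter=None, seed=0, max_tries=10000):
    """Randomly group viewport stars into distinct 4-star quads.

    stars: dict mapping catalog id -> (x, y) position in the rendered viewport.
    max_diameter: hypothetical optional limit on the distance between the two
        farthest stars of a quad (None means no limit).
    Returns a sorted list of quads, each a sorted 4-tuple of catalog ids.
    """
    rng = random.Random(seed)
    ids = sorted(stars)
    quads = set()
    for _ in range(max_tries):
        if len(quads) >= n_quads or len(ids) < 4:
            break
        quad = tuple(sorted(rng.sample(ids, 4)))
        if max_diameter is not None:
            diameter = max(math.dist(stars[a], stars[b])
                           for a, b in combinations(quad, 2))
            if diameter > max_diameter:
                continue  # too spread out: reject this draw
        quads.add(quad)
    return sorted(quads)
```

The `max_tries` cap keeps the loop bounded when few quads satisfy the size limit, in which case fewer than `n_quads` quads are returned.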

However, several important factors must be considered here:

- Radiation in space causes false star-like spots to form on the image sensor, so the star extraction algorithm needs to be robust against these false stars.

- Due to noise, and again radiation, some actual stars may not be extracted at all.

- Finally, because of power constraints, a typical star tracker has little RAM and a CPU that is orders of magnitude slower than a modern PC.
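The star extraction algorithm itself is not described here. A generic sketch, assuming a thresholded image, 4-connected blobs and intensity-weighted centroids, is shown below. The `min_pixels` test is an assumed heuristic for rejecting single-pixel radiation hits: it presumes that real stars are spread over several pixels, for example by defocusing. It will not catch elongated radiation tracks, so the identification stage still has to tolerate false stars. The function name is hypothetical.

```python
def extract_stars(image, threshold, min_pixels=3):
    """Find star centroids in a 2-D image (list of rows of pixel intensities).

    Pixels above `threshold` are grouped into 4-connected blobs. Blobs with
    fewer than `min_pixels` pixels are discarded as likely radiation hits
    (an assumed heuristic). Each remaining blob is reduced to its
    intensity-weighted centroid (x, y) = (column, row).
    """
    h, w = len(image), len(image[0])
    seen = [[False] * w for _ in range(h)]
    stars = []
    for r0 in range(h):
        for c0 in range(w):
            if seen[r0][c0] or image[r0][c0] <= threshold:
                continue
            # Flood-fill one blob with an explicit stack.
            stack, blob = [(r0, c0)], []
            seen[r0][c0] = True
            while stack:
                r, c = stack.pop()
                blob.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < h and 0 <= nc < w and not seen[nr][nc]
                            and image[nr][nc] > threshold):
                        seen[nr][nc] = True
                        stack.append((nr, nc))
            if len(blob) < min_pixels:
                continue  # too small: treat as a hot pixel / radiation hit
            total = sum(image[r][c] for r, c in blob)
            x = sum(c * image[r][c] for r, c in blob) / total
            y = sum(r * image[r][c] for r, c in blob) / total
            stars.append((x, y))
    return stars
```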

For these reasons, the star catalog must be indexed in a way that tolerates false stars, missing stars and under-represented viewports. Our implementation includes a star-grouping algorithm that aims to generate groups formed from actual stars even under heavy radiation, that is, when there are many false stars. In our experiments, star identification succeeds 98% of the time with full-sky coverage when an image contains about 300% more false stars than actual stars.
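To see why false stars make purely random grouping hard, consider the probability that four points drawn uniformly at random from an image are all actual stars. The hypothetical helper below computes it. The numbers in the example (10 actual and 30 false stars) are illustrative and are not taken from the experiments above.

```python
from math import comb


def p_all_real(n_real, n_false, k=4):
    """Probability that k points drawn uniformly at random, without
    replacement, from n_real actual and n_false false stars are all actual.
    Assumes n_real + n_false >= k."""
    return comb(n_real, k) / comb(n_real + n_false, k)


# Illustrative numbers only: 10 actual stars and 30 false ones.
print(f"{p_all_real(10, 30):.4f}")  # 0.0023
```

Here C(10, 4) / C(40, 4) = 210 / 91390, so only about 1 in 435 random quads would consist of actual stars alone. This is why a grouping strategy that favors actual stars matters so much under heavy radiation.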

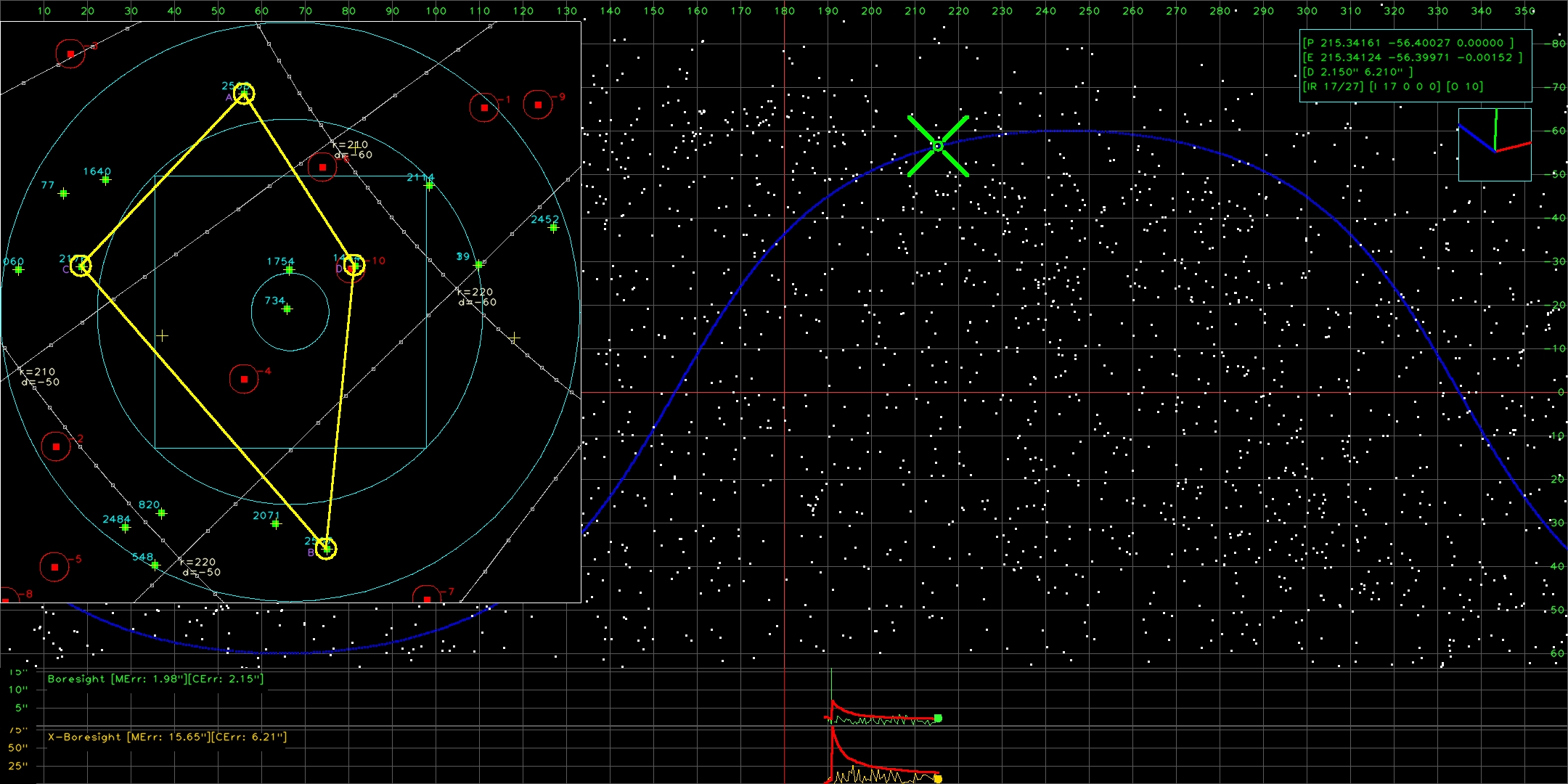

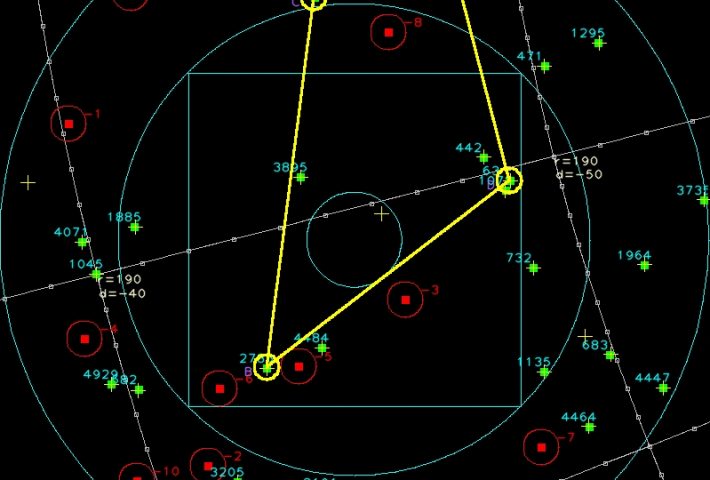

The images below show example star identification and attitude determination results for different simulation parameters:

Example #1

Related research is published in:

[1] Effects of Star Extraction Artifacts on Blind Attitude Determination. Engin Tola, Medeni Soysal. To appear in the International Conference on Image Processing (ICIP), October 2014.

[2] Yıldız Çıkarma Hatalarının Uydu Yönelimi Bulma Başarımı Üzerindeki Etkileri (Effects of Star Detection Errors on Attitude Determination Performance). Medeni Soysal, Engin Tola. In Turkish. IEEE Sinyal İşleme ve İletişim Uygulamaları (Signal Processing and Communications Applications), 2014.